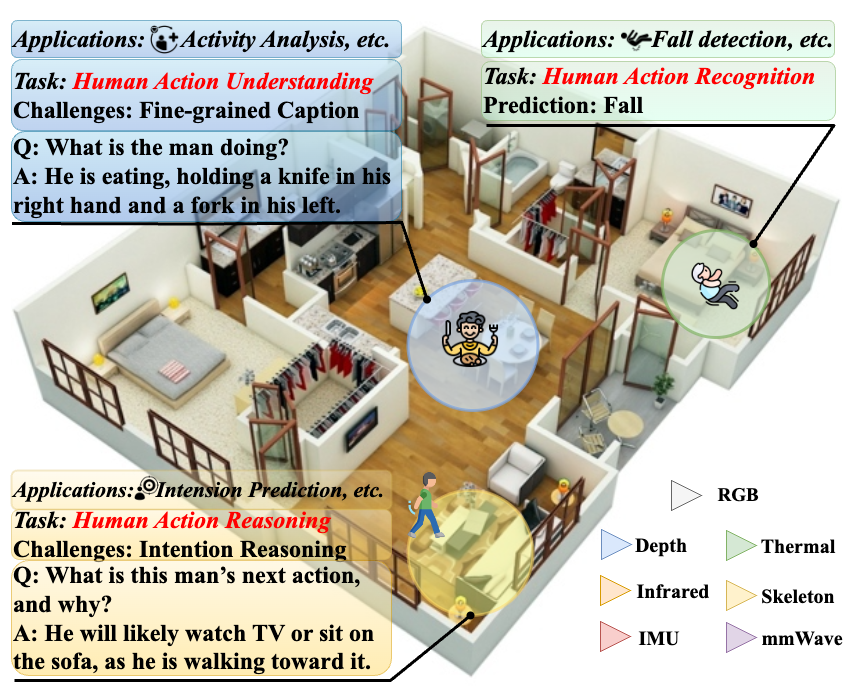

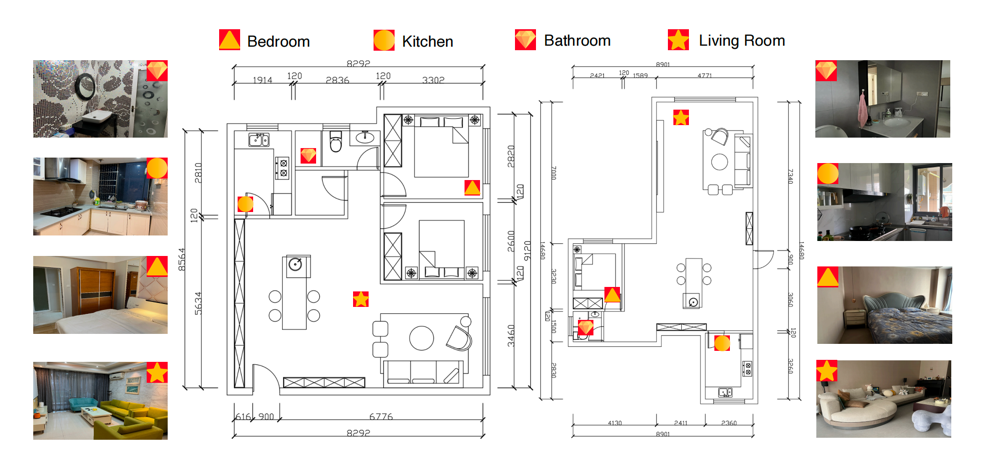

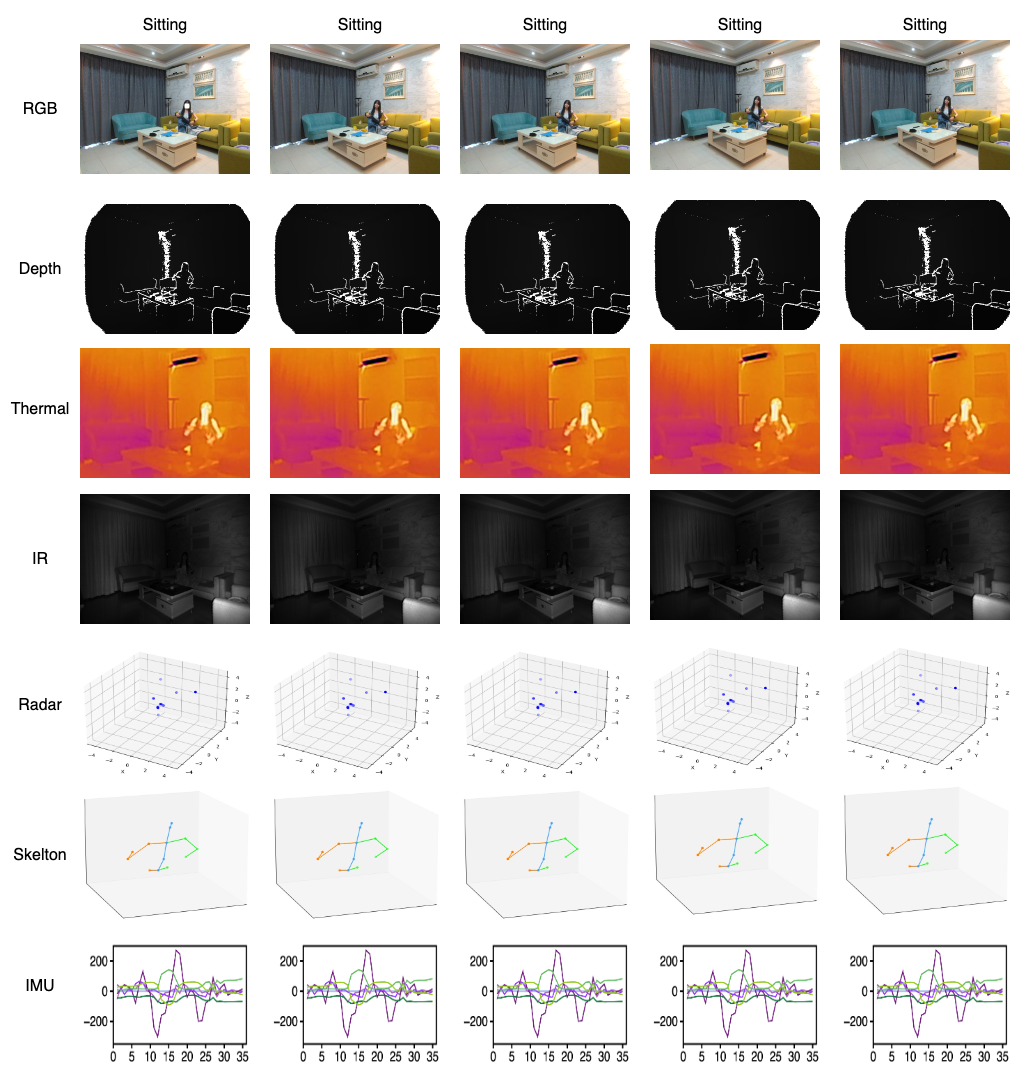

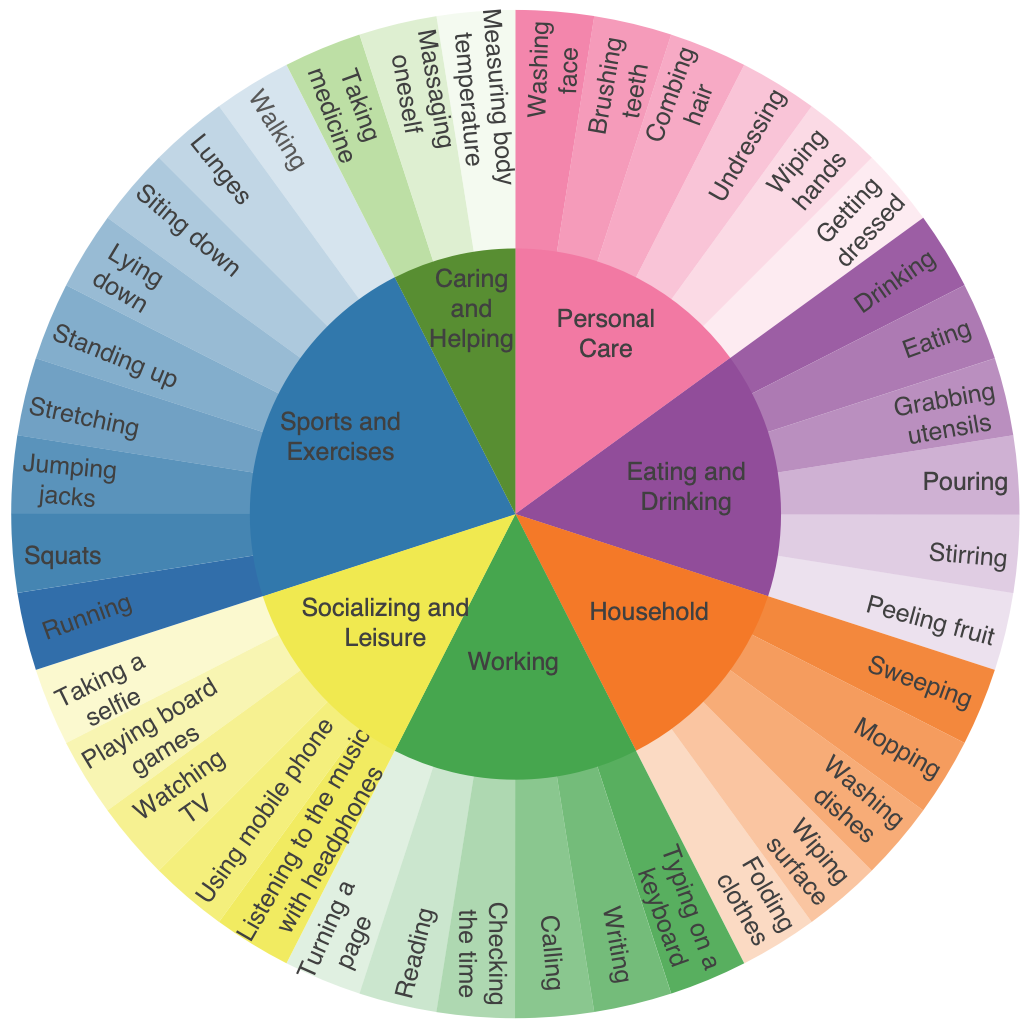

CUHK-X

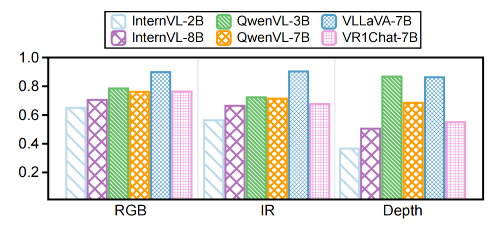

A Large-Scale Multimodal Dataset and Benchmark for Human Action Recognition, Understanding and Reasoning

CUHK

UIUC

Columbia University

PITT University

Because we are preparing a competition, this version releases only example data. The full dataset will be released soon!